Artificial Intelligence (AI) and Machine Learning (ML) projects are exciting—until they fail before they even reach production. Surprisingly, one of the most common reasons for failure isn’t the algorithm, the infrastructure, or even the budget—it’s poor data governance.

For companies relying on AI/ML, especially in 2025’s competitive environment, data governance is no longer optional; it’s a survival skill. In this post, we’ll explore what data governance means, why it’s critical, and the five major ways poor governance can sabotage AI/ML projects before launch.

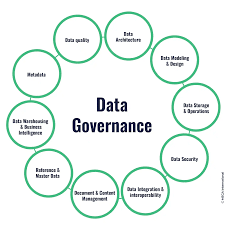

What Is Data Governance in AI/ML?

Data governance refers to the framework of policies, processes, and responsibilities that ensure data is accurate, consistent, secure, and usable.

In AI/ML, it covers:

- Data quality standards (accuracy, completeness, timeliness)

- Data ownership and accountability

- Compliance with privacy laws (GDPR, HIPAA, etc.)

- Clear data access controls

- Documentation of data sources and lineage

Without this structure, an AI/ML model might learn from flawed or biased data, making it unreliable—or even harmful—when deployed.

1. Poor Data Quality Leads to Wrong Predictions

An AI model is only as good as the data it learns from. If the dataset contains inaccuracies, duplicates, outdated records, or mislabeled examples, the model’s predictions will be flawed.

Example:

A retail company trained a recommendation engine on sales data without cleaning it first. The data included duplicate entries and missing purchase histories. During testing, the engine recommended irrelevant products, frustrating customers and hurting sales forecasts.

Impact on AI/ML Projects:

- Increased error rates in predictions

- Poor customer experience

- Loss of trust in the system

Solution:

Implement automated data profiling and validation checks before model training. Use tools like Great Expectations or TensorFlow Data Validation to catch quality issues early.
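
As a rough illustration of what such checks look for, here is a minimal sketch in plain Python. It does not use the Great Expectations or TensorFlow Data Validation APIs; the field names, the `profile_records` helper, and the one-year freshness window are all assumptions made for the example.

```python
from datetime import date

# Hypothetical required columns for a sales record; adjust to your schema.
REQUIRED_FIELDS = ("order_id", "customer_id", "product_id", "order_date")


def profile_records(records, max_age_days=365, today=None):
    """Return a dict of issue name -> list of row indexes that fail the check."""
    today = today or date.today()
    issues = {"missing_field": [], "duplicate_order_id": [], "stale_record": []}
    seen_ids = set()
    for i, row in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        if any(row.get(f) in (None, "") for f in REQUIRED_FIELDS):
            issues["missing_field"].append(i)
            continue
        # Uniqueness: the same order_id must not appear twice.
        if row["order_id"] in seen_ids:
            issues["duplicate_order_id"].append(i)
        seen_ids.add(row["order_id"])
        # Timeliness: flag records older than the allowed window.
        age_days = (today - date.fromisoformat(row["order_date"])).days
        if age_days > max_age_days:
            issues["stale_record"].append(i)
    return issues


sample = [
    {"order_id": "A1", "customer_id": "C1", "product_id": "P9", "order_date": "2025-03-01"},
    {"order_id": "A1", "customer_id": "C1", "product_id": "P9", "order_date": "2025-03-01"},
    {"order_id": "A2", "customer_id": "", "product_id": "P3", "order_date": "2025-03-02"},
    {"order_id": "A3", "customer_id": "C2", "product_id": "P4", "order_date": "2019-01-15"},
]
report = profile_records(sample, today=date(2025, 6, 1))
print(report)
```

A check like this would typically run as a gate before training, failing the job when any issue list is non-empty or exceeds an agreed threshold.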

2. Lack of Compliance Can Halt Deployment

Teams in 2025 face both established privacy laws such as GDPR and HIPAA and newer AI-specific regulation such as the EU AI Act. If your AI model uses personal or sensitive data without meeting compliance requirements, legal teams (or regulators) can stop the project in its tracks.

Example:

A health-tech startup collected patient data from multiple hospitals but failed to de-identify it as HIPAA requires. The model was ready to deploy, but the legal team blocked it until the data pipeline was rebuilt, delaying launch by six months.

Impact on AI/ML Projects:

- Costly project delays

- Legal penalties and fines

- Damaged brand reputation

Solution:

Set up a compliance checklist during data collection. Use privacy-preserving techniques like data masking, tokenization, and differential privacy.
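
To make masking and tokenization concrete, the sketch below uses only Python’s standard library. The record fields, the `pseudonymize` helper, and the hard-coded key are hypothetical and for illustration only. Keyed tokenization like this is pseudonymization, and on its own it should not be read as meeting HIPAA or GDPR requirements.

```python
import hashlib
import hmac


def tokenize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed, deterministic token (HMAC-SHA256)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with direct identifiers tokenized or masked."""
    cleaned = dict(record)
    cleaned["patient_id"] = tokenize(record["patient_id"], secret_key)
    cleaned["email"] = mask_email(record["email"])
    cleaned.pop("full_name", None)  # drop fields the model does not need
    return cleaned


# Assumption: in practice the key comes from a secrets manager, never source code.
KEY = b"example-key-for-illustration-only"
raw = {
    "patient_id": "MRN-00123",
    "full_name": "Jane Doe",
    "email": "jane.doe@example.org",
    "diagnosis_code": "E11.9",
}
safe = pseudonymize(raw, KEY)
print(safe)
```

Because the token is deterministic for a given key, records for the same patient can still be joined across tables without exposing the original identifier.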

3. Inconsistent Data Formats Break the Pipeline

AI/ML pipelines often rely on data from multiple sources—databases, APIs, CSV files, IoT devices, etc. Without governance, these datasets can have inconsistent formats, making integration a nightmare.

Example:

A logistics company tried to predict delivery delays using data from trucks, warehouses, and customer service. But each system recorded dates, times, and locations differently. The ML team spent weeks just cleaning and converting data formats—delaying the project launch.

Impact on AI/ML Projects:

- Increased development time

- Higher data engineering costs

- Risk of broken integrations after deployment

Solution:

Define a master data standard and enforce it across all data sources. Use ETL (Extract, Transform, Load) automation tools like Talend or Apache NiFi.
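
As one example of enforcing a standard, the sketch below converts timestamps from several sources into a single ISO 8601 UTC form using Python’s standard library. The source names and their formats are hypothetical, and so is the rule that sources without a UTC offset already record UTC. A real pipeline would document each source’s time zone explicitly.

```python
from datetime import datetime, timezone

# Hypothetical per-source formats; each system writes timestamps differently.
SOURCE_FORMATS = {
    "truck_gps": "%Y-%m-%dT%H:%M:%S%z",   # 2025-03-04T14:30:00+0100
    "warehouse": "%d/%m/%Y %H:%M",        # 04/03/2025 13:30 (day first)
    "support_desk": "%m-%d-%Y %H:%M:%S",  # 03-04-2025 13:30:00 (month first)
}


def to_standard_utc(raw: str, source: str) -> str:
    """Parse a source-specific timestamp and return ISO 8601 in UTC."""
    parsed = datetime.strptime(raw, SOURCE_FORMATS[source])
    if parsed.tzinfo is None:
        # Assumption: sources without an offset already record UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc).isoformat()


events = [
    ("truck_gps", "2025-03-04T14:30:00+0100"),
    ("warehouse", "04/03/2025 13:30"),
    ("support_desk", "03-04-2025 13:30:00"),
]
normalized = [to_standard_utc(raw, source) for source, raw in events]
print(normalized)
```

All three inputs describe the same instant, so after normalization they compare equal. That property is what downstream joins and feature calculations depend on.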

4. No Clear Data Ownership Creates Chaos

When no one is clearly responsible for the accuracy and availability of data, issues pile up. Data scientists spend more time chasing down sources and approvals than building models.

Example:

An e-commerce platform was building a fraud detection model but couldn’t identify who maintained certain customer transaction tables. The delays in getting approvals meant the project missed its planned holiday season launch.

Impact on AI/ML Projects:

- Slow decision-making

- Accountability gaps

- Risk of data becoming outdated or unavailable

Solution:

Assign data stewards—individuals responsible for each dataset. Use data catalogs like Collibra or Alation to track ownership and metadata.
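
The kind of ownership metadata a catalog holds can be pictured with a small sketch. This is not the Collibra or Alation data model. The `CatalogEntry` fields, the dataset names, and the `find_unowned` helper are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CatalogEntry:
    dataset: str
    steward: Optional[str]   # person accountable for accuracy and availability
    contact: Optional[str]   # where approval requests should go
    refresh_days: int        # expected refresh interval


def find_unowned(entries: List[CatalogEntry]) -> List[str]:
    """Return names of datasets that have no steward or no contact on record."""
    return [e.dataset for e in entries if not e.steward or not e.contact]


catalog = [
    CatalogEntry("customer_transactions", None, None, 1),
    CatalogEntry("chargeback_history", "A. Rivera", "a.rivera@example.com", 7),
    CatalogEntry("device_fingerprints", "S. Patel", None, 1),
]
unowned = find_unowned(catalog)
print(unowned)
```

Running a report like this before a project starts surfaces ownership gaps while there is still time to close them.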

5. Missing Data Lineage Leads to Trust Issues

Data lineage is the record of where data came from, how it was transformed, and where it goes. Without it, teams can’t trace which sources and transformations fed a given prediction, which makes debugging slow and explainability or audit requirements hard to meet.

Example:

A bank built a loan approval AI, but regulators asked for proof of how applicant data was processed. Without documented lineage, the bank had to pause the project and rebuild documentation retroactively.

Impact on AI/ML Projects:

- Regulatory non-compliance

- Difficulty debugging models

- Loss of stakeholder trust

Solution:

Implement lineage tracking from day one using tools like Apache Atlas or DataHub.
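
To show what a lineage record captures, here is a minimal sketch in plain Python. It does not use the Apache Atlas or DataHub APIs. The `run_step` wrapper, the log fields, and the `loan_applications.csv` source name are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(rows) -> str:
    """Content hash of a dataset so a later audit can confirm what was used."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_step(name, func, rows, source, lineage_log):
    """Apply one transformation and append a lineage entry describing it."""
    result = func(rows)
    lineage_log.append({
        "step": name,
        "source": source,
        "input_hash": fingerprint(rows),
        "output_hash": fingerprint(result),
        "rows_in": len(rows),
        "rows_out": len(result),
        "ran_at": datetime.now(timezone.utc).isoformat(),
    })
    return result


applicants = [{"id": 1, "income": 52000}, {"id": 2, "income": None}]
log = []
cleaned = run_step(
    "drop_missing_income",
    lambda rows: [r for r in rows if r["income"] is not None],
    applicants, "loan_applications.csv", log,
)
scaled = run_step(
    "income_to_thousands",
    lambda rows: [{**r, "income": r["income"] / 1000} for r in rows],
    cleaned, "drop_missing_income", log,
)
print(json.dumps(log, indent=2))
```

Each entry’s `input_hash` matches the previous step’s `output_hash`, so an auditor can follow the chain from raw source to model input.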

Case Studies: Poor Data Governance in Action

Case Study 1: AI Recruitment Tool Gone Wrong

A large tech firm built an AI to shortlist candidates but didn’t check for bias in historical hiring data. The model learned gender and ethnic biases from past data and had to be scrapped after media backlash.

Lesson:

Data governance isn’t just about compliance—it’s about ethical AI.
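
One simple pre-training check for this kind of problem compares selection rates across groups in the historical data. The sketch below is an illustration under stated assumptions, not the method used in the case above. The record layout and group labels are invented. The 0.8 threshold follows the common "four-fifths" screening heuristic, which is a starting point for review and not proof of fairness.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of positive outcomes per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for r in records:
        totals[r[group_key]] += 1
        if r[outcome_key]:
            selected[r[group_key]] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity).

    Assumes at least one group has a non-zero selection rate.
    """
    return min(rates.values()) / max(rates.values())


# Invented historical hiring data: 10 candidates per group.
history = (
    [{"group": "A", "hired": True}] * 6 + [{"group": "A", "hired": False}] * 4
    + [{"group": "B", "hired": True}] * 3 + [{"group": "B", "hired": False}] * 7
)
rates = selection_rates(history, "group", "hired")
ratio = impact_ratio(rates)
needs_review = ratio < 0.8
print(rates, ratio, needs_review)
```

A low ratio in the training data is a signal to investigate before a model learns and repeats the pattern.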

Case Study 2: Smart City Traffic Predictions Fail

A smart city project used traffic sensor data to optimize signals but didn’t standardize time formats. With readings from different sensors misaligned in time, the model produced incorrect predictions and worsened congestion until the data governance framework was overhauled.

Lesson:

Data format consistency is crucial for real-time AI systems.

How to Build Strong Data Governance for AI/ML Projects

- Create a Governance Framework Early – Don’t wait until deployment to think about governance.

- Automate Data Quality Checks – Detect and fix issues before training models.

- Assign Clear Roles – Make sure every dataset has an owner.

- Document Everything – Maintain lineage, transformations, and access logs.

- Align Governance with Business Goals – Keep governance practical, not bureaucratic.

Final Thoughts

In AI/ML, data is your foundation. Without governance, even the best algorithms collapse. As AI regulations tighten and competition grows in 2025, poor data governance isn’t just a risk; it’s one of the most predictable failure points.

By investing in governance from day one, businesses can avoid delays, reduce costs, and launch AI projects that truly deliver value.